Voice Anti-Spoofing

A lightweight Support Vector Machine (SVM) based anti-spoofing model that distinguishes between audio from a real human and audio from a playback device.

Note: All data and processing techniques are proprietary to Renesas America Ltd., so only limited, non-sensitive information is disclosed in this project description.

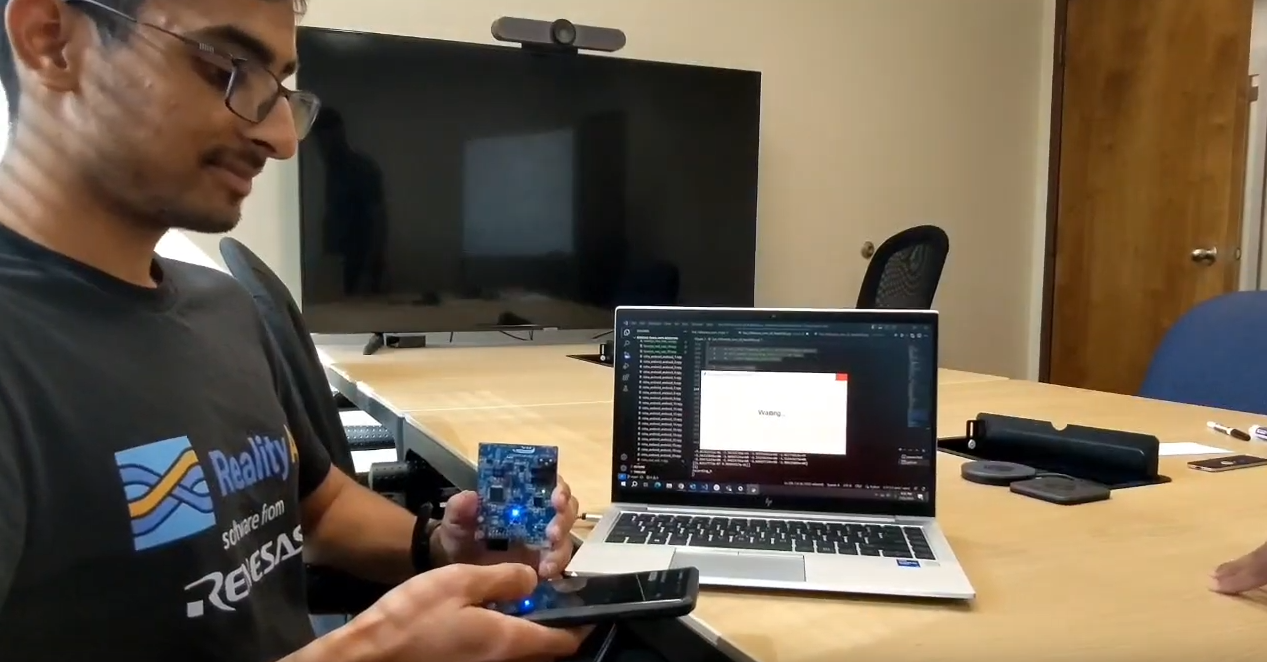

Click on the following image to get redirected to a demo video of the project:

INTRODUCTION

In this project, I developed a voice authentication system for a Renesas voice board that captures voice samples and distinguishes between genuine and spoofed voices. The system combines signal processing techniques with a machine learning classifier to strengthen authentication on Renesas voice boards.

KEY COMPONENTS

- Voice Capture Module:
  - Use a Renesas voice board equipped with a microphone to capture voice samples.
  - Use a pre-trained trigger mechanism to initiate voice capture upon detecting the phrase “Hi Renesas.”

- Data Collection and Processing:
  - Collect voice samples from multiple individuals, including genuine utterances and spoofed playback.
  - Store voice data for further analysis and feature extraction.

- Feature Extraction Pipeline:
  - Develop a feature extraction pipeline to analyze voice samples and extract relevant features.
  - Explore different feature extraction techniques to capture the distinguishing characteristics of each voice sample.

- Machine Learning Model Training:
  - Train a Support Vector Machine (SVM) model using the extracted features.
  - Implement a classification algorithm capable of distinguishing between genuine and spoofed voices.

- System Integration:
  - Integrate the voice authentication system with the Renesas voice board for live testing.
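To make the feature extraction component more concrete, here is a minimal, generic sketch in Python. The features actually used in this project are proprietary and are not disclosed, so nothing below should be read as the project's pipeline. The sketch assumes 16 kHz mono audio, 25 ms frames with a 10 ms hop, and 20 linearly spaced frequency bands; the function names `frame_signal`, `log_band_energies`, and `utterance_features` are hypothetical and chosen only for illustration.

```python
import numpy as np


def frame_signal(signal, frame_len=400, hop=160):
    """Split a 1-D signal into overlapping frames.

    The defaults correspond to 25 ms frames with a 10 ms hop at an
    assumed 16 kHz sample rate.
    """
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Row i holds the sample indices of frame i.
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return signal[idx]


def log_band_energies(signal, n_bands=20, frame_len=400, hop=160):
    """Return per-frame log energies in linearly spaced frequency bands."""
    frames = frame_signal(signal, frame_len, hop) * np.hanning(frame_len)
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    # Group the FFT bins into n_bands contiguous bands and sum each band.
    bands = np.array_split(power, n_bands, axis=1)
    energies = np.stack([band.sum(axis=1) for band in bands], axis=1)
    return np.log(energies + 1e-10)  # small offset avoids log(0)


def utterance_features(signal, **kwargs):
    """Summarize an utterance as one fixed-length vector (mean and std per band)."""
    feats = log_band_energies(signal, **kwargs)
    return np.concatenate([feats.mean(axis=0), feats.std(axis=0)])


# Illustrative input only: one second of synthetic noise, not real voice data.
rng = np.random.default_rng(0)
example_signal = rng.standard_normal(16000)
features = utterance_features(example_signal)
print(features.shape)  # (40,)
```

Summarizing each utterance as a fixed-length vector is one common way to feed variable-length audio to an SVM, which expects inputs of equal dimension.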

PROJECT WORKFLOW

- Voice Capture:
  - The Renesas board detects the trigger phrase “Hi Renesas” and initiates voice capture.
  - Voice samples are recorded and stored for analysis.

- Data Collection:
  - Gather voice samples from diverse individuals to create a comprehensive dataset.
  - Include both genuine utterances and spoofed playback for training and testing purposes.

- Feature Extraction:
  - Analyze voice samples using signal processing techniques to extract relevant features.
  - Transform raw voice data into a feature vector representation suitable for machine learning algorithms.

- Model Training:
  - Train an SVM model on the extracted features to classify voices as genuine or spoofed.
  - Validate model performance using cross-validation and tune hyperparameters to improve accuracy.

- System Evaluation:
  - Integrate the trained model with the Renesas board and test it against playback devices under real-world conditions.
  - Evaluate system performance with a diverse range of voice samples and spoofing attempts.
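The model training step in the workflow above (an SVM validated with cross-validation and tuned over hyperparameters) could look roughly like the following scikit-learn sketch. It is an illustration under stated assumptions, not the project's training code: the data is synthetic, the RBF kernel and the parameter grid are arbitrary choices, and the helper name `train_svm` is hypothetical.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def train_svm(X, y, seed=0):
    """Fit an SVM with feature scaling and a cross-validated grid search."""
    # Scaling lives inside the pipeline so it is re-fit on each training fold.
    pipeline = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
    # Assumed search grid; real values would depend on the actual features.
    param_grid = {
        "svc__C": [0.1, 1.0, 10.0],
        "svc__gamma": ["scale", 0.01, 0.1],
    }
    cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=seed)
    search = GridSearchCV(pipeline, param_grid, cv=cv, scoring="accuracy")
    search.fit(X, y)
    return search


# Synthetic stand-in for feature vectors: label 0 = genuine, 1 = spoofed.
rng = np.random.default_rng(0)
X_genuine = rng.normal(0.0, 1.0, size=(60, 40))
X_spoofed = rng.normal(1.0, 1.0, size=(60, 40))
X = np.vstack([X_genuine, X_spoofed])
y = np.array([0] * 60 + [1] * 60)

search = train_svm(X, y)
print(search.best_params_)
print(round(search.best_score_, 3))
```

After fitting, `search.best_estimator_` is the pipeline refit on all of the training data with the selected hyperparameters, and its `predict` method can be applied to new feature vectors.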

CONCLUSION

This project demonstrates the potential of Renesas boards as a platform for voice authentication systems. By combining signal processing techniques with an SVM classifier, the system is designed to distinguish between genuine and spoofed voices reliably. With further refinement and testing, it holds promise for strengthening security in applications such as access control and identity verification.