American Sign Language Detection

A CNN- and LSTM-based American Sign Language (ASL) detector for letters in video feeds.

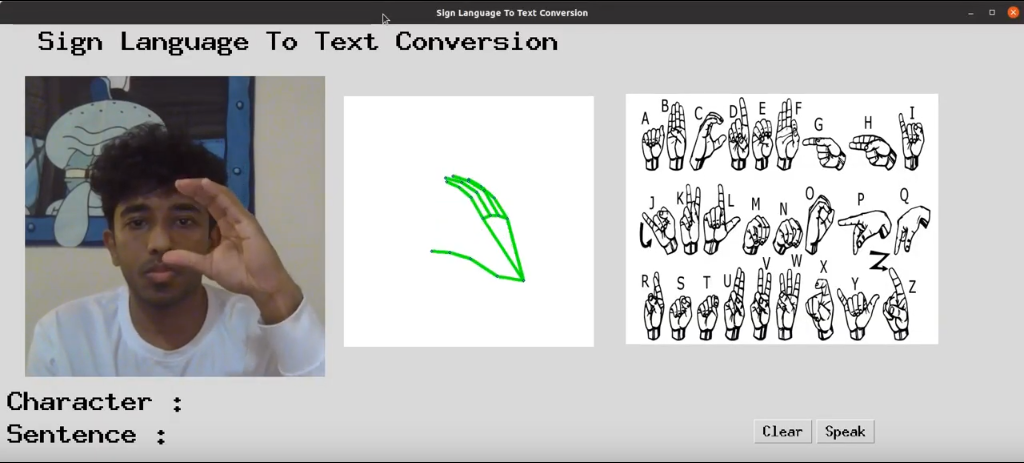

DEMO

A demo showcasing the ASL model along with its GUI is available below:

INTRODUCTION

- Communication Gap: There is a fundamental communication barrier between ASL users and people unfamiliar with ASL. This gap can lead to misunderstandings, exclusion from conversations, and difficulties in social and professional interactions for ASL users.

- Variability in Signing Styles: Like spoken languages, ASL exhibits individual styles. Different ASL users may sign in their own ways, with variations in hand shapes and movements. These nuances can make it hard even for experienced interpreters or other ASL users to understand every individual’s signing style.

- Complexity of Sign Languages: ASL encompasses more than hand gestures; it also includes non-manual components such as facial expressions and body movements. These elements are integral to conveying accurate information and emotions, adding layers of complexity to the language.

This underscores the need for an ASL recognition AI model capable of successfully detecting different gestures. This project presents a model designed to interpret ASL by analyzing gesture video frames. It processes the visual information of ASL signs and translates them into English words. This approach has the potential to standardize the interpretation of various signing styles, thereby making ASL more accessible to non-users.

OBJECTIVE

The primary objective of this project is to develop an accurate and efficient ASL detection system that combines CNN and LSTM layers. By analyzing gesture video frames, the system aims to translate ASL signs into English words, bridging the communication gap between ASL users and non-users.

METHODOLOGY

-

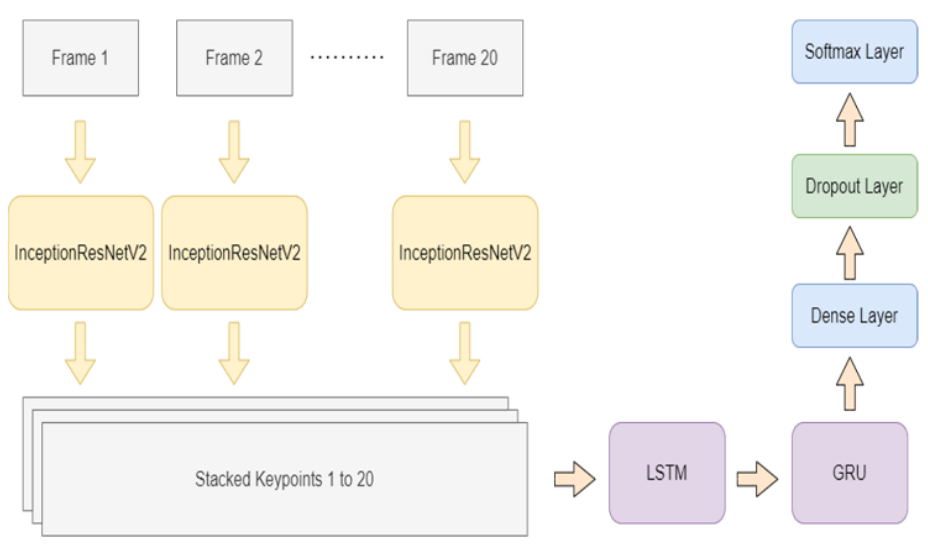

Feature Extraction: Feature vectors are extracted using InceptionResNetV2, which are then passed to the model for further processing.

-

Object Classification: Video frames are classified into objects using InceptionResNetV2. Subsequently, key points are created and stacked for video frames.

-

Model Architecture: The model consists of LSTM and GRU layers arranged in a sequential manner to capture semantic dependencies effectively. Dropout is employed to reduce overfitting and improve generalization ability.

-

Training: The model is trained using appropriate hyperparameters and the softmax function is utilized to obtain the final output.
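The stacking and softmax steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the project's actual code: the helper names (`pad_sequence`, `softmax`, `decode_letter`), the fixed sequence length, zero-padding of short clips, and the A-Z label set are all hypothetical choices made for the example.

```python
import math
import string


def pad_sequence(features, length, dim):
    """Truncate or zero-pad a list of per-frame feature vectors to `length` frames.

    Assumption: every clip is brought to the same number of frames so that
    the stacked sequences can be fed to a recurrent model in batches.
    """
    clipped = [list(vec) for vec in features[:length]]
    clipped += [[0.0] * dim for _ in range(length - len(clipped))]
    return clipped


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_letter(logits, labels=string.ascii_uppercase):
    """Return the most probable label and its probability.

    Assumption: one output score per letter, in alphabetical order.
    """
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


if __name__ == "__main__":
    # One clip with a single 2-dimensional frame feature, padded to 3 frames.
    print(pad_sequence([[1.0, 2.0]], length=3, dim=2))

    # Hypothetical scores where the third class has the highest value.
    scores = [0.0] * 26
    scores[2] = 5.0
    print(decode_letter(scores))
```

In a real pipeline the per-frame vectors would come from the InceptionResNetV2 feature extractor and the scores from the final layer of the LSTM/GRU model; here both are stand-in values.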

RESULTS

The trained ASL recognition model demonstrates promising results in accurately detecting and translating ASL gestures into English words. By leveraging CNN and LSTM layers, the model effectively captures the nuances of ASL, including variations in signing styles and non-manual components such as facial expressions and body movements.

CONCLUSION

This project highlights the effectiveness of CNN- and LSTM-based models in ASL detection tasks. By accurately interpreting ASL gestures from video feeds, this technology has the potential to significantly improve communication and accessibility for ASL users in social and professional settings. Further advances in this field could lead to greater inclusivity and understanding between ASL users and non-users.